Kubernetes for the Poor: Preparing the Infrastructure

Let's start building the Kubernetes cluster according to the plan we prepared.

We will create four virtual machines running Ubuntu 16.04 in the Hetzner cloud: one master and three worker nodes. Then we will set up access and install all the required packages.

Preparing the workstation

The workstation needs Ansible, Terraform, and the terraform-provider-hcloud plugin. Install all of them following the official instructions.

The scripts needed for the initial setup of the virtual machines are hosted on GitLab. After cloning the repository, initialize the directory for terraform:

git clone https://gitlab.com/alxrem/kube4blog.git

cd kube4blog

terraform init

Creating the servers

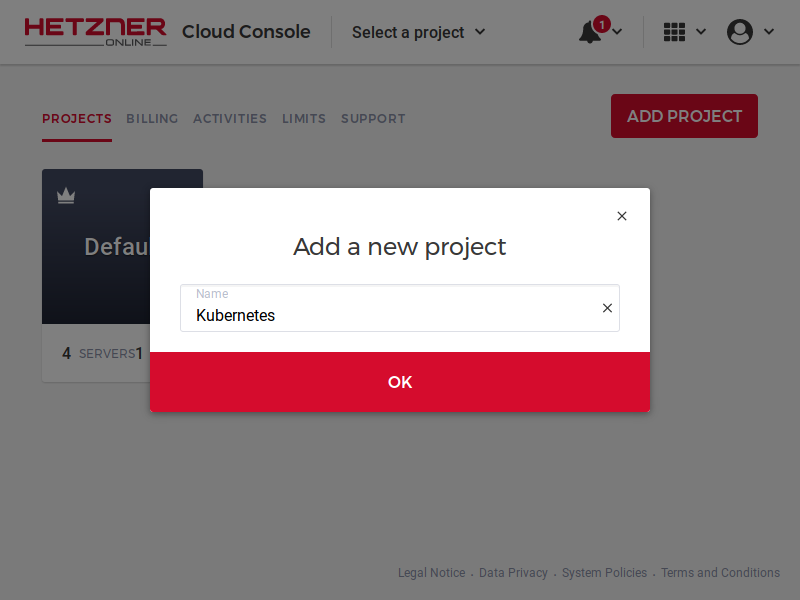

In the cloud console, create a separate project.

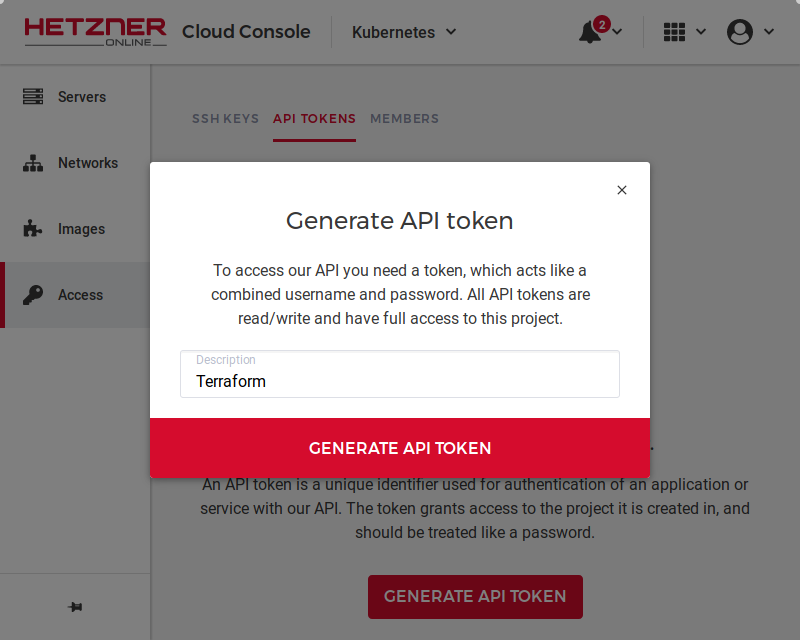

Then create an API access token in that project.

Copy the token right away, because it will not be shown again. It is best to save it in a file named kubernetes.auto.tfvars, in the same directory as kubernetes.tf.

api_token = "X8ttSA2GZD1UMIIbwsrzH8J3Ez8H8grNFGvoiYNjO3JiFS1XMYNSg5pNac6no5zt"

Alternatively, store it elsewhere and enter it each time you run terraform.
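Both ways of handling the token can be sketched in shell. This is only an illustration and is not part of the repository: the function names save_token and export_token are hypothetical, and only the file name kubernetes.auto.tfvars and the variable name api_token come from the text above. The second function relies on Terraform reading input variables from environment variables named TF_VAR_<name>, which avoids retyping the token within one shell session.

```shell
# Hypothetical helpers for handling the Hetzner API token.

# Write the token to a tfvars file that only the owner can read.
# The umask affects newly created files only; an existing file keeps its mode.
save_token() {
    token="$1"
    file="${2:-kubernetes.auto.tfvars}"
    ( umask 077 && printf 'api_token = "%s"\n' "$token" > "$file" )
}

# Keep the token out of any file and pass it through the environment.
# Terraform maps TF_VAR_api_token to the input variable api_token.
export_token() {
    export TF_VAR_api_token="$1"
}
```

With the file variant, remember to keep kubernetes.auto.tfvars out of version control.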

terraform uploads the file ~/.ssh/id_rsa.pub to the cloud project and installs it as the key for the servers' administrator account. To use a different key, edit the following section of kubernetes.tf:

resource "hcloud_ssh_key" "default" {

name = "admin"

public_key = "${file("~/.ssh/id_rsa.pub")}"

}

Let's review the changes terraform is going to make:

$ terraform plan

Refreshing Terraform state in-memory prior to plan...

The refreshed state will be used to calculate this plan, but will not be

persisted to local or remote state storage.

hcloud_ssh_key.default: Refreshing state... (ID: 24573)

------------------------------------------------------------------------

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

+ hcloud_server.master1

id: <computed>

datacenter: <computed>

image: "ubuntu-16.04"

ipv4_address: <computed>

ipv6_address: <computed>

keep_disk: "false"

location: <computed>

name: "master1"

server_type: "cx11"

ssh_keys.#: "1"

ssh_keys.0: "admin"

status: <computed>

+ hcloud_server.node1

id: <computed>

datacenter: <computed>

image: "ubuntu-16.04"

ipv4_address: <computed>

ipv6_address: <computed>

keep_disk: "false"

location: <computed>

name: "node1"

server_type: "cx11"

ssh_keys.#: "1"

ssh_keys.0: "admin"

status: <computed>

+ hcloud_server.node2

id: <computed>

datacenter: <computed>

image: "ubuntu-16.04"

ipv4_address: <computed>

ipv6_address: <computed>

keep_disk: "false"

location: <computed>

name: "node2"

server_type: "cx11"

ssh_keys.#: "1"

ssh_keys.0: "admin"

status: <computed>

+ hcloud_server.node3

id: <computed>

datacenter: <computed>

image: "ubuntu-16.04"

ipv4_address: <computed>

ipv6_address: <computed>

keep_disk: "false"

location: <computed>

name: "node3"

server_type: "cx11"

ssh_keys.#: "1"

ssh_keys.0: "admin"

status: <computed>

+ hcloud_ssh_key.default

id: <computed>

fingerprint: <computed>

name: "admin"

public_key: "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC9yLo1uGqV+8Is7MVaiauOBPwgJJBdLSHinBP+OT0NHE9A1zjFY23nVYaCoXNJky0w2V6mSXSUpljZZ6yhfRj8hf+Drf7x0LLdKF0lfxMjAjRc8oVtwYN+88IeIGRJLeSLDrCH+rpGmJe1YF2M0K/CDuiHEHBVM1VBjLlBM/zNv16XEf0c1zDmBDAGejGy6tZB3zhMeG6/2IYwyTYolzv+crWcXd63Lgw7a2BdqLFDrKcenwoMGigyFPoXiqzSfo51uF95I2F21OmkgZ1y/uaqlAuX6EJFayCzODzlNuYiZ9ea4COS+8nN5B/tPIwv9az/7VUZ7Jz1uBs7dv51aoHN vpupkin@localhost"

Plan: 5 to add, 0 to change, 0 to destroy.

------------------------------------------------------------------------

Note: You didn't specify an "-out" parameter to save this plan, so Terraform

can't guarantee that exactly these actions will be performed if

"terraform apply" is subsequently run.

terraform is going to create four servers and one SSH key, which is exactly what we need. Let's apply the changes. The plan is shown again and has to be confirmed by typing yes:

$ terraform apply

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

+ hcloud_server.master1

id: <computed>

datacenter: <computed>

image: "ubuntu-16.04"

ipv4_address: <computed>

ipv6_address: <computed>

keep_disk: "false"

location: <computed>

name: "master1"

server_type: "cx11"

ssh_keys.#: "1"

ssh_keys.0: "admin"

status: <computed>

+ hcloud_server.node1

id: <computed>

datacenter: <computed>

image: "ubuntu-16.04"

ipv4_address: <computed>

ipv6_address: <computed>

keep_disk: "false"

location: <computed>

name: "node1"

server_type: "cx11"

ssh_keys.#: "1"

ssh_keys.0: "admin"

status: <computed>

+ hcloud_server.node2

id: <computed>

datacenter: <computed>

image: "ubuntu-16.04"

ipv4_address: <computed>

ipv6_address: <computed>

keep_disk: "false"

location: <computed>

name: "node2"

server_type: "cx11"

ssh_keys.#: "1"

ssh_keys.0: "admin"

status: <computed>

+ hcloud_server.node3

id: <computed>

datacenter: <computed>

image: "ubuntu-16.04"

ipv4_address: <computed>

ipv6_address: <computed>

keep_disk: "false"

location: <computed>

name: "node3"

server_type: "cx11"

ssh_keys.#: "1"

ssh_keys.0: "admin"

status: <computed>

+ hcloud_ssh_key.default

id: <computed>

fingerprint: <computed>

name: "admin"

public_key: "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC9yLo1uGqV+8Is7MVaiauOBPwgJJBdLSHinBP+OT0NHE9A1zjFY23nVYaCoXNJky0w2V6mSXSUpljZZ6yhfRj8hf+Drf7x0LLdKF0lfxMjAjRc8oVtwYN+88IeIGRJLeSLDrCH+rpGmJe1YF2M0K/CDuiHEHBVM1VBjLlBM/zNv16XEf0c1zDmBDAGejGy6tZB3zhMeG6/2IYwyTYolzv+crWcXd63Lgw7a2BdqLFDrKcenwoMGigyFPoXiqzSfo51uF95I2F21OmkgZ1y/uaqlAuX6EJFayCzODzlNuYiZ9ea4COS+8nN5B/tPIwv9az/7VUZ7Jz1uBs7dv51aoHN vpupkin@localhost\n"

Plan: 5 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

hcloud_ssh_key.default: Creating...

fingerprint: "" => "<computed>"

name: "" => "admin"

public_key: "" => "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC9yLo1uGqV+8Is7MVaiauOBPwgJJBdLSHinBP+OT0NHE9A1zjFY23nVYaCoXNJky0w2V6mSXSUpljZZ6yhfRj8hf+Drf7x0LLdKF0lfxMjAjRc8oVtwYN+88IeIGRJLeSLDrCH+rpGmJe1YF2M0K/CDuiHEHBVM1VBjLlBM/zNv16XEf0c1zDmBDAGejGy6tZB3zhMeG6/2IYwyTYolzv+crWcXd63Lgw7a2BdqLFDrKcenwoMGigyFPoXiqzSfo51uF95I2F21OmkgZ1y/uaqlAuX6EJFayCzODzlNuYiZ9ea4COS+8nN5B/tPIwv9az/7VUZ7Jz1uBs7dv51aoHN vpupkin@localhost\n"

hcloud_ssh_key.default: Creation complete after 0s (ID: 27365)

hcloud_server.node1: Creating...

datacenter: "" => "<computed>"

image: "" => "ubuntu-16.04"

ipv4_address: "" => "<computed>"

ipv6_address: "" => "<computed>"

keep_disk: "" => "false"

location: "" => "<computed>"

name: "" => "node1"

server_type: "" => "cx11"

ssh_keys.#: "" => "1"

ssh_keys.0: "" => "admin"

status: "" => "<computed>"

hcloud_server.node2: Creating...

datacenter: "" => "<computed>"

image: "" => "ubuntu-16.04"

ipv4_address: "" => "<computed>"

ipv6_address: "" => "<computed>"

keep_disk: "" => "false"

location: "" => "<computed>"

name: "" => "node2"

server_type: "" => "cx11"

ssh_keys.#: "" => "1"

ssh_keys.0: "" => "admin"

status: "" => "<computed>"

hcloud_server.master1: Creating...

datacenter: "" => "<computed>"

image: "" => "ubuntu-16.04"

ipv4_address: "" => "<computed>"

ipv6_address: "" => "<computed>"

keep_disk: "" => "false"

location: "" => "<computed>"

name: "" => "master1"

server_type: "" => "cx11"

ssh_keys.#: "" => "1"

ssh_keys.0: "" => "admin"

status: "" => "<computed>"

hcloud_server.node3: Creating...

datacenter: "" => "<computed>"

image: "" => "ubuntu-16.04"

ipv4_address: "" => "<computed>"

ipv6_address: "" => "<computed>"

keep_disk: "" => "false"

location: "" => "<computed>"

name: "" => "node3"

server_type: "" => "cx11"

ssh_keys.#: "" => "1"

ssh_keys.0: "" => "admin"

status: "" => "<computed>"

hcloud_server.node1: Still creating... (10s elapsed)

hcloud_server.node2: Still creating... (10s elapsed)

hcloud_server.master1: Still creating... (10s elapsed)

hcloud_server.node3: Still creating... (10s elapsed)

hcloud_server.node1: Still creating... (20s elapsed)

hcloud_server.node2: Still creating... (20s elapsed)

hcloud_server.master1: Still creating... (20s elapsed)

hcloud_server.node3: Still creating... (20s elapsed)

hcloud_server.node1: Still creating... (30s elapsed)

hcloud_server.node2: Still creating... (30s elapsed)

hcloud_server.master1: Still creating... (30s elapsed)

hcloud_server.node3: Still creating... (30s elapsed)

hcloud_server.node1: Creation complete after 33s (ID: 617704)

hcloud_server.node2: Creation complete after 34s (ID: 617707)

hcloud_server.master1: Creation complete after 35s (ID: 617706)

hcloud_server.node3: Still creating... (40s elapsed)

hcloud_server.node3: Creation complete after 42s (ID: 617705)

Apply complete! Resources: 5 added, 0 changed, 0 destroyed.
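The note at the end of the terraform plan output points out that, without -out, the actions applied later may differ from the plan that was reviewed. A minimal sketch of saving the plan and then applying exactly that file follows; the function name plan_and_apply and the file name kube.tfplan are made up for illustration.

```shell
# Hypothetical helper: review a plan, then apply exactly that saved plan.
# Usage: plan_and_apply [plan-file]
plan_and_apply() {
    plan_file="${1:-kube.tfplan}"
    # Save the computed plan to a file in addition to printing it.
    terraform plan -out="$plan_file" || return 1
    # Applying a saved plan file performs the recorded actions
    # without asking for confirmation again.
    terraform apply "$plan_file"
}
```

Because a saved plan is applied without the yes prompt, read the plan output carefully before the second step runs.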

Initial server setup

We will configure the virtual machines with ansible.

The ansible playbook reconfigures SSH access to the servers if required, sets each hostname to the name of its virtual machine, installs and configures docker, and installs the kubernetes packages.

When the playbook runs without additional settings, access to the servers is left unchanged.

This behavior can be changed by adding variables to the file group_vars/all:

admin_user_name: vpupkin

Setting admin_user_name creates the account vpupkin, copies its key from the root account, blocks SSH access for root, and allows vpupkin to use sudo without a password.

admin_user_password: $6$L8QheNz2$wYANpJVXkrQhKkDzY8cQq5Cm4LtDZJQUvS5s9aJoNhS0LWbcx05EXQT83L15LDW0

If admin_user_password is set in addition to admin_user_name, sudo will ask for a password. In that case ansible-playbook also has to be run with the -K option and given this password, because once the new account exists the server is configured through it.
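The sample value of admin_user_password starts with $6$, the prefix of a SHA-512 crypt hash, so the variable presumably expects a hash and not a plain-text password. Under that assumption, and assuming OpenSSL 1.1.1 or newer is installed on the workstation, such a hash could be produced as sketched below. The function name hash_password is hypothetical.

```shell
# Hypothetical helper: read a password from stdin and print a SHA-512 crypt hash.
# Requires an openssl build that supports "passwd -6" (OpenSSL 1.1.1 or newer).
hash_password() {
    openssl passwd -6 -stdin
}

# Example use (commented out so that sourcing this file has no side effects):
#   printf '%s\n' 'my-sudo-password' | hash_password
# Put the printed value into group_vars/all as admin_user_password, then run
#   ansible-playbook -i terraform-hetzner-inventory bootstrap.yml -K
# and type the plain-text password when ansible asks for it.
```

The salt is random, so every run prints a different hash for the same password.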

admin_user_keys: |
  ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC9yLo1uGqV+8Is7MVaiauOBPwgJJBdLSHinBP+OT0NHE9A1zjFY23nVYaCoXNJky0w2V6mSXSUpljZZ6yhfRj8hf+Drf7x0LLdKF0lfxMjAjRc8oVtwYN+88IeIGRJLeSLDrCH+rpGmJe1YF2M0K/CDuiHEHBVM1VBjLlBM/zNv16XEf0c1zDmBDAGejGy6tZB3zhMeG6/2IYwyTYolzv+crWcXd63Lgw7a2BdqLFDrKcenwoMGigyFPoXiqzSfo51uF95I2F21OmkgZ1y/uaqlAuX6EJFayCzODzlNuYiZ9ea4COS+8nN5B/tPIwv9az/7VUZ7Jz1uBs7dv51aoHN vpupkin@localhost

Setting admin_user_keys writes its contents to ~/.ssh/authorized_keys in place of the installation key.

ssh_port: 2222

Setting ssh_port changes the port the sshd daemon listens on.

The inventory must be the terraform-hetzner-inventory script, which extracts server names and addresses from the terraform.tfstate file.
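Before running the playbook it can be useful to see what the inventory script actually reports. Ansible calls executable inventory scripts with the --list argument and expects JSON on standard output, so the script can be run by hand the same way. The sketch below assumes the script is executable and sits in the current directory; the function name check_inventory is hypothetical.

```shell
# Hypothetical helper: sanity-check the dynamic inventory without touching the servers.
# Usage: check_inventory [path-to-inventory-script]
check_inventory() {
    inventory="${1:-./terraform-hetzner-inventory}"
    # Run the script the way ansible does and show the raw JSON.
    "$inventory" --list || return 1
    # Ask ansible which hosts it resolves from that inventory (no connection is made).
    ansible -i "$inventory" all --list-hosts
}
```

If the host list is empty, check that terraform.tfstate exists in the directory where terraform apply was run.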

Let's run the server setup without any additional variables:

$ ansible-playbook -i terraform-hetzner-inventory bootstrap.yml

PLAY [all] ********************************************************************

TASK [Ensure python] **********************************************************

changed: [node1]

changed: [node2]

changed: [master1]

changed: [node3]

PLAY [all] ********************************************************************

TASK [Gathering Facts] ********************************************************

ok: [node3]

ok: [node1]

ok: [node2]

ok: [master1]

TASK [sudo : Install package] *************************************************

included: /roles/sudo/tasks/package_apt.yml for node1, node2, master1, node3

TASK [sudo : Install package] *************************************************

ok: [node1]

ok: [node2]

ok: [master1]

ok: [node3]

TASK [sudo : Define OS-specific variables] ************************************

ok: [node1]

ok: [node2]

ok: [master1]

ok: [node3]

TASK [sudo : Export sudo grop] ************************************************

ok: [node1]

ok: [node2]

ok: [master1]

ok: [node3]

TASK [sudo : Configure sudoers] ***********************************************

changed: [node3]

changed: [master1]

changed: [node2]

changed: [node1]

TASK [admin_user : Get users] *************************************************

skipping: [node1]

skipping: [node2]

skipping: [master1]

skipping: [node3]

TASK [admin_user : Delete current user] ***************************************

skipping: [node1]

skipping: [node2]

skipping: [master1]

skipping: [node3]

TASK [admin_user : Get groups] ************************************************

skipping: [node1]

skipping: [node2]

skipping: [master1]

skipping: [node3]

TASK [admin_user : Delete current group] **************************************

skipping: [node1]

skipping: [node2]

skipping: [master1]

skipping: [node3]

TASK [admin_user : Ensure group] **********************************************

skipping: [node1]

skipping: [node2]

skipping: [master1]

skipping: [node3]

TASK [admin_user : Ensure user] ***********************************************

skipping: [node1]

skipping: [node2]

skipping: [master1]

skipping: [node3]

TASK [admin_user : Ensure user is in sudo group] ******************************

skipping: [node1]

skipping: [node2]

skipping: [master1]

skipping: [node3]

TASK [admin_user : Ensure home] ***********************************************

skipping: [node1]

skipping: [node2]

skipping: [master1]

skipping: [node3]

TASK [admin_user : Ensure ssh dir] ********************************************

skipping: [node1]

skipping: [node2]

skipping: [master1]

skipping: [node3]

TASK [admin_user : Ensure authorized keys] ************************************

skipping: [node1]

skipping: [node2]

skipping: [master1]

skipping: [node3]

TASK [admin_user : Ensure authorized keys] ************************************

skipping: [node1]

skipping: [node2]

skipping: [master1]

skipping: [node3]

PLAY [all] ********************************************************************

TASK [Gathering Facts] ********************************************************

ok: [master1]

ok: [node3]

ok: [node2]

ok: [node1]

TASK [Ensure root home] *******************************************************

ok: [node3]

ok: [node2]

ok: [node1]

ok: [master1]

TASK [Ensure hostname] ********************************************************

ok: [node3]

ok: [node1]

ok: [node2]

ok: [master1]

TASK [Detect cloud config] ****************************************************

ok: [node3]

ok: [master1]

ok: [node2]

ok: [node1]

TASK [Change hostname in cloud.conf] ******************************************

changed: [node3]

changed: [node1]

changed: [master1]

changed: [node2]

TASK [Install basic packages] *************************************************

ok: [node1] => (item=bash-completion)

ok: [node3] => (item=bash-completion)

ok: [master1] => (item=bash-completion)

ok: [node2] => (item=bash-completion)

ok: [node3] => (item=less)

ok: [master1] => (item=less)

ok: [node1] => (item=less)

ok: [node2] => (item=less)

ok: [master1] => (item=vim)

ok: [node3] => (item=vim)

ok: [node1] => (item=vim)

ok: [node2] => (item=vim)

TASK [ssh : Open SSH port on firewalld] ***************************************

skipping: [node1]

skipping: [node2]

skipping: [master1]

skipping: [node3]

TASK [ssh : Install package] **************************************************

included: /roles/ssh/tasks/package_apt.yml for node1, node2, master1, node3

TASK [ssh : Install package] **************************************************

ok: [node1]

ok: [node2]

ok: [node3]

ok: [master1]

TASK [ssh : Configure SSH] ****************************************************

changed: [node3] => (item={'key': u'X11Forwarding', 'value': u'no'})

changed: [master1] => (item={'key': u'X11Forwarding', 'value': u'no'})

changed: [node1] => (item={'key': u'X11Forwarding', 'value': u'no'})

changed: [node2] => (item={'key': u'X11Forwarding', 'value': u'no'})

ok: [node3] => (item={'key': u'Port', 'value': 22})

ok: [node1] => (item={'key': u'Port', 'value': 22})

ok: [master1] => (item={'key': u'Port', 'value': 22})

ok: [node2] => (item={'key': u'Port', 'value': 22})

TASK [ssh : Disable root login] ***********************************************

skipping: [node1] => (item={'key': u'PermitRootLogin', 'value': u'no'})

skipping: [node2] => (item={'key': u'PermitRootLogin', 'value': u'no'})

skipping: [master1] => (item={'key': u'PermitRootLogin', 'value': u'no'})

skipping: [node3] => (item={'key': u'PermitRootLogin', 'value': u'no'})

RUNNING HANDLER [ssh : Restart SSH] *******************************************

changed: [node3]

changed: [node2]

changed: [master1]

changed: [node1]

PLAY [all] ********************************************************************

TASK [Gathering Facts] ********************************************************

skipping: [node2]

skipping: [node1]

skipping: [master1]

skipping: [node3]

TASK [Disable root password] **************************************************

skipping: [node1]

skipping: [node2]

skipping: [master1]

skipping: [node3]

TASK [Delete root keys] *******************************************************

skipping: [node1]

skipping: [node2]

skipping: [master1]

skipping: [node3]

PLAY [all] ********************************************************************

TASK [Gathering Facts] ********************************************************

ok: [node3]

ok: [node2]

ok: [node1]

ok: [master1]

TASK [docker : Detect repository] *********************************************

included: /roles/docker/tasks/repository_apt.yml for node1, node2, master1, node3

TASK [docker : Ensure original packages are absent] ***************************

ok: [node1] => (item=[u'docker', u'docker-engine', u'docker.io'])

ok: [node3] => (item=[u'docker', u'docker-engine', u'docker.io'])

ok: [node2] => (item=[u'docker', u'docker-engine', u'docker.io'])

ok: [master1] => (item=[u'docker', u'docker-engine', u'docker.io'])

TASK [docker : Ensure https transport] ****************************************

ok: [node3]

ok: [node1]

ok: [master1]

ok: [node2]

TASK [docker : Ensure GPG key of repository] **********************************

changed: [node1]

changed: [node3]

changed: [node2]

changed: [master1]

TASK [docker : Ensure docker repository] **************************************

changed: [node1]

changed: [node2]

changed: [master1]

changed: [node3]

TASK [docker : Ensure docker package] *****************************************

changed: [node1]

changed: [node2]

changed: [master1]

changed: [node3]

TASK [docker : Ensure docker config dir] **************************************

ok: [node3]

ok: [node1]

ok: [master1]

ok: [node2]

TASK [docker : Configure docker] **********************************************

changed: [node1]

changed: [node3]

changed: [master1]

changed: [node2]

TASK [docker : Start docker] **************************************************

ok: [node3]

ok: [node1]

ok: [node2]

ok: [master1]

TASK [kubernetes : Detect repository] *****************************************

included: /roles/kubernetes/tasks/repository_apt.yml for node1, node2, master1, node3

TASK [kubernetes : Ensure https transport] ************************************

ok: [node1]

ok: [node3]

ok: [master1]

ok: [node2]

TASK [kubernetes : Ensure GPG key of repository] ******************************

changed: [node1]

changed: [node3]

changed: [node2]

changed: [master1]

TASK [kubernetes : Ensure repository] *****************************************

changed: [node1]

changed: [node2]

changed: [node3]

changed: [master1]

TASK [kubernetes : Ensure packages] *******************************************

changed: [node2] => (item=[u'kubelet', u'kubeadm', u'kubectl'])

changed: [node1] => (item=[u'kubelet', u'kubeadm', u'kubectl'])

changed: [node3] => (item=[u'kubelet', u'kubeadm', u'kubectl'])

changed: [master1] => (item=[u'kubelet', u'kubeadm', u'kubectl'])

PLAY RECAP ********************************************************************

master1 : ok=32 changed=12 unreachable=0 failed=0

node1 : ok=32 changed=12 unreachable=0 failed=0

node2 : ok=32 changed=12 unreachable=0 failed=0

node3 : ok=32 changed=12 unreachable=0 failed=0

Once the ansible playbook completes successfully, the servers are ready for Kubernetes.
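One way to double-check the result is to ask every server for the versions of the installed tools with ad-hoc ansible commands. This is a sketch under the assumption that the same inventory script gives ansible working access to the servers, as it did for the playbook run; the function name verify_nodes is hypothetical.

```shell
# Hypothetical helper: confirm that docker and kubeadm are installed on all servers.
# Usage: verify_nodes [path-to-inventory-script]
verify_nodes() {
    inventory="${1:-terraform-hetzner-inventory}"
    # Each call runs one command on every host and prints its output per host.
    ansible -i "$inventory" all -m command -a 'docker --version' || return 1
    ansible -i "$inventory" all -m command -a 'kubeadm version -o short'
}
```

If admin_user_password was set, add -K to the ansible calls only when the command needs sudo; the two commands above do not.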

The next step is creating the cluster.